How to think about testing pricing

To A/B test, or not to A/B test: that is the question.

Given the perfect pricing model doesn’t exist, it should be no surprise that optimizing how you price your SaaS product requires constant iteration. It’s a massive growth lever. But there’s a rub. The longer you wait to pull it, the harder (and more painful) it gets. Don’t sit back, hoping things will improve on their own. Be proactive.

That’s why we changed pricing three times in one year. After sharing what we learned along the way, many people asked how we arrived at our current pricing model, specifically, “Did you A/B test it?”

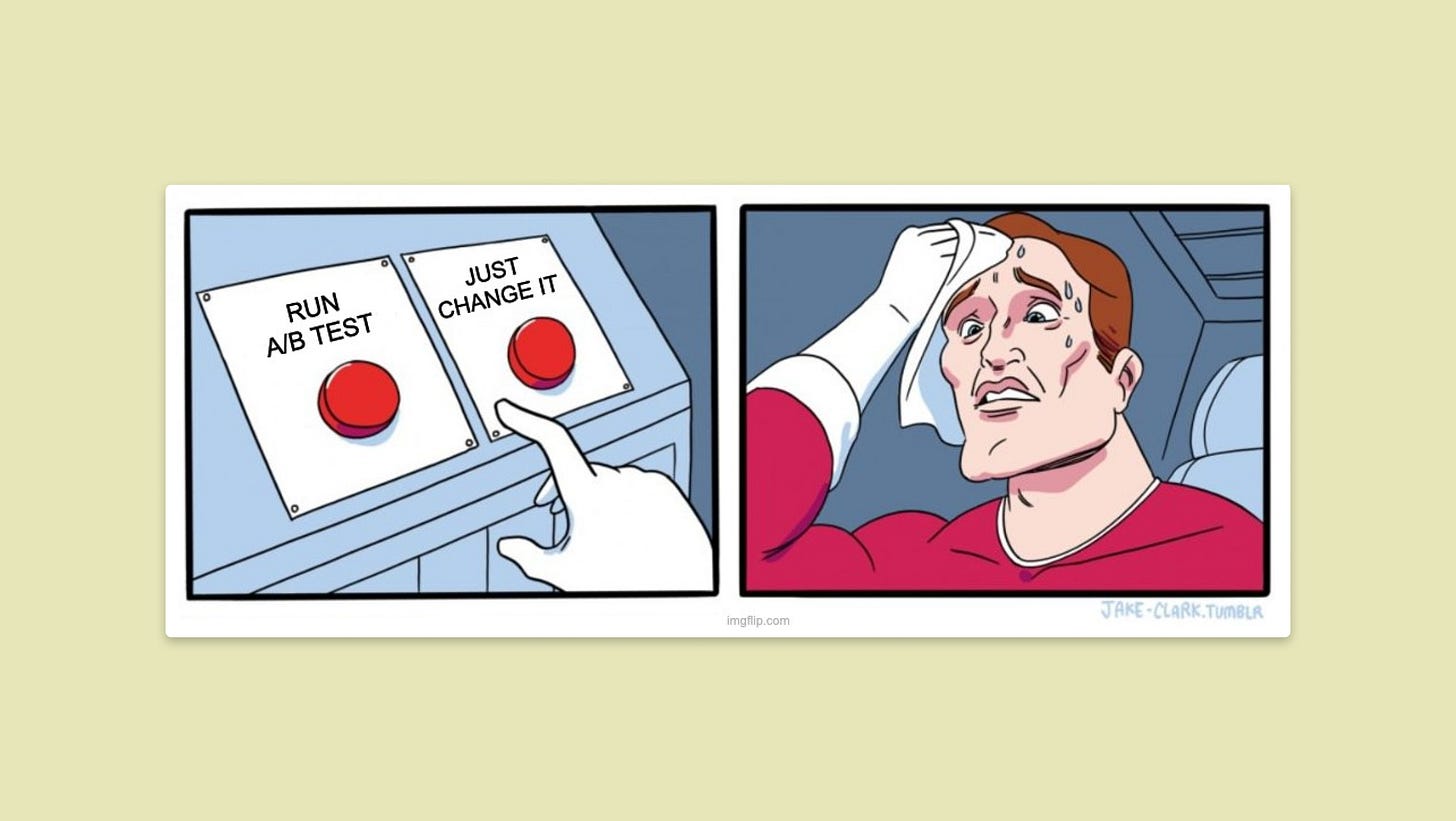

When changing a marketing site, product, or pricing, most people instinctively want to run a split test. It feels safer. But in reality, for most early-stage companies, split tests take far too long to reach statistical significance. They also add complexity.

Split testing the wrong change can cost more than making the change itself. Simply put, A/B testing is not for startups, especially not for pricing.

“So, if you didn’t test it, did you just change it?”

Yes, that’s exactly what we did.

But that doesn’t mean you should never consider running a split test. In our experience, the right approach will depend on whether you’re early or later-stage.

Testing as an early-stage company

When you have a limited runway, you must move and decide fast. You probably don't have the luxury of waiting for an A/B test to reach statistical significance. This is especially true when understanding the impact of a pricing change. You need to let cohorts on new pricing age to understand the impact on expansion, contraction, and churn over time. You need the full picture to make an informed decision.

For example, in the early days of Intercom, as the product evolved, we changed pricing to better monetize the additional value of the new product we’d shipped. In doing so, pricing became more complex, which led to customers being confused about what they needed to buy. This resulted in people buying more than they needed to start, artificially increasing the initial Average Revenue per Account (ARPA). While the change looked great early on, the true impact of the changes eventually showed up in increased contraction and churn months later, detrimentally impacting Net Revenue Retention (NRR).

“But, do you really need to wait that long?”

Well, unless people flat-out stop buying and the impact of the change is obvious, yes, you should wait. And here’s the thing: what would normally take at least three months to learn if you just made the change could take twice as long if you ran an A/B test, since each variant only sees a share of your new customers. You’re better off learning that a change is bad sooner rather than later. And if it turns out to be a good change, happy days.

“But, if you don’t run an A/B test, how will you actually know it’s better?”

At an early stage, you should be looking for big wins, not small optimizations. To know if a pricing change is 5% better, you probably need an A/B test. To know if it's 2x better, you probably don't.
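To put rough numbers on that intuition, here is a minimal sketch using the standard normal-approximation sample size formula for comparing two conversion rates. The 5% baseline conversion rate, the 5% significance level, and the 80% power are assumptions chosen for illustration, and conversion rate is only a simplified proxy for what a pricing change actually affects.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant to detect a relative lift in
    conversion rate with a two-sided two-proportion z-test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must be distinct and strictly between 0 and 1")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_power = NormalDist().inv_cdf(power)          # critical value for power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical baseline: 5% of visitors convert on current pricing.
small_lift = sample_size_per_variant(0.05, 0.05)  # new pricing is 5% better
large_lift = sample_size_per_variant(0.05, 1.00)  # new pricing is 2x better

print(f"5% better: {small_lift:,} visitors per variant")
print(f"2x better: {large_lift:,} visitors per variant")
```

Under these assumptions, detecting a 5% improvement takes on the order of a hundred thousand visitors per variant, while a 2x improvement shows up within a few hundred. That gap is why big wins rarely need a formal test.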

Additionally, qualitative feedback from leads and customers is a strong signal in helping understand the impact of pricing that’s often hard, if not impossible, to see in the data. As we’ve shared in the past, we constantly heard people being unhappy about being forced to buy seats they didn't need. We decided to remove this requirement with our latest pricing model. We didn’t need to run an A/B test to know we achieved one of our goals there.

Listening for this kind of feedback is easy when you're adding tens of customers a month. It's very hard if you're adding hundreds or thousands. There'll be too many different clusters of things people say. It’s often too hard to see the forest for the trees.

Testing as a later-stage company

But what if you have the volume necessary for a split test to reach statistical significance quickly? What if you have much more revenue at risk with any pricing change? Surely, then, you should A/B test it? Maybe. It depends on your risk tolerance and whether you have the resources to set up a test. If you go down the testing route, there’s one thing to consider that will impact how you set up your test.

There are typically multiple people involved in a B2B software purchasing decision. As a result, testing pricing can be problematic because you risk stakeholders from the same company seeing different versions of your pricing. Your champion may see the A variant, and the decision maker may see the B variant. Not only does this have the potential to create confusion, but it can also erode trust, something you want to avoid at all costs when establishing a relationship with a potential customer. You can still run a split test; you just need to set things up differently—cue example.
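One common way to set things up differently is to assign variants per company rather than per visitor, so that everyone at the same company sees the same pricing. The sketch below is an illustration, not a description of what we did: the function names, the experiment label, and the choice of email domain as the company identifier are all assumptions.

```python
import hashlib


def company_key(email: str) -> str:
    """Hypothetical company identifier: the lowercased email domain.
    Shared domains such as free webmail would need separate handling."""
    return email.strip().lower().rsplit("@", 1)[-1]


def assign_variant(key: str, experiment: str = "pricing-test",
                   treatment_share: float = 0.5) -> str:
    """Deterministically map a company to variant "A" (control) or "B" (test).
    Hashing the key means the same company always lands in the same variant."""
    digest = hashlib.sha256(f"{experiment}:{key}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # uniform in [0, 1)
    return "B" if bucket < treatment_share else "A"


# The champion and the decision maker share a domain, so they share a variant.
champion = assign_variant(company_key("champion@acme.example"))
decision_maker = assign_variant(company_key("cfo@acme.example"))
print(champion, decision_maker)
```

Because the assignment depends only on the experiment label and the company key, it stays stable across sessions and devices without storing any state.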

Toward the end of my time at Intercom, I worked on a project to overhaul pricing. At that time, we were north of $100M in Annual Recurring Revenue (ARR). There was a lot of revenue at risk with any pricing change. To mitigate the risk of lost revenue, and to avoid the problem mentioned above, we tested new pricing in select countries instead of rolling it out to all new customers. The US, our biggest market, was our control group, and a handful of other “lookalike” countries made up our test group. It was an imperfect test, but it allowed us to mitigate what could have been a costly change for the business.

None of this is a perfect science. But relax, it doesn't have to be. 😅 You know a lot about your business. You're consuming a million data points daily from sales calls, your customer-facing teams, and all the data you look at. It’s OK to make a gut call. You might be surprised that it’s right more often than not.

The flip side is that if you feel like you broke something, you have to act on that, too. This doesn’t just apply to pricing. It applies to your product and your funnel as well. That’s why we recommend looking at your key metrics every single day.

So, just as there’s no perfect pricing model, there’s no perfect way to test pricing. If you can, our advice is to make the change, measure the impact by comparing cohorts on new pricing to cohorts on old pricing, and decide whether to keep iterating based on that. It’s imperfect, but it will allow you to learn faster.
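As a rough sketch of what that cohort comparison can look like, the snippet below computes initial ARPA and NRR for accounts on old and new pricing. The `Account` structure and every number in it are made up for illustration. They are not real data from us or from Intercom, and a real analysis would compare cohorts at the same age.

```python
from dataclasses import dataclass


@dataclass
class Account:
    pricing: str      # "old" or "new" pricing model at signup
    start_mrr: float  # monthly recurring revenue in the signup month
    later_mrr: float  # MRR some months later (0.0 if the account churned)


def cohort_summary(accounts, pricing):
    """Summarize one pricing cohort: size, initial ARPA, NRR, and logo churn."""
    cohort = [a for a in accounts if a.pricing == pricing]
    if not cohort:
        raise ValueError(f"no accounts on {pricing!r} pricing")
    start = sum(a.start_mrr for a in cohort)
    later = sum(a.later_mrr for a in cohort)
    return {
        "accounts": len(cohort),
        "initial_arpa": start / len(cohort),
        "nrr": later / start,  # includes expansion, contraction, and churn
        "logo_churn": sum(a.later_mrr == 0 for a in cohort) / len(cohort),
    }


# Illustrative, invented data: new pricing starts higher but retains worse.
accounts = [
    Account("old", 100, 110), Account("old", 100, 100),
    Account("old", 100, 0), Account("old", 100, 120),
    Account("new", 150, 100), Account("new", 150, 0),
    Account("new", 150, 150), Account("new", 150, 90),
]

for pricing in ("old", "new"):
    print(pricing, cohort_summary(accounts, pricing))
```

In this invented example the new cohort has a higher initial ARPA but a lower NRR, which is the pattern that only becomes visible once cohorts have had time to age.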

No matter what approach you take, here are a few things we’d advise for any pricing project:

1. Know what your goal is.

As with any change you’d make to your website or product, be clear on what success looks like. It might not be increasing revenue. As we mentioned above, it might be to eradicate customer complaints and increase satisfaction. Clearly define and align on your goal upfront so you can correctly assess the impact of the change.

2. Isolate the change as best you can.

To the best of your ability, don't change pricing alongside, or in quick succession with, other big things. While A/B testing is often unnecessary, you're not helping yourself if you simultaneously launch a new version of your product with new pricing. It’ll be hard to tease apart which change impacted what.

3. Assess impact on the full funnel over time.

Pricing changes often have a domino effect. They can impact things you don’t intend them to. As with the Intercom example above, where new pricing increased ARPA but negatively impacted NRR, what might look like a win might not be when you consider churn later.

4. Make changes only for new customers first.

Most people hate change. This is particularly true when it comes to pricing. It’s also work for you to migrate customers on older pricing models to the current model. So, before you put existing customers (and your team) through that, ensure your changes have the desired result with new customers.

Like this post? You might enjoy this one, too: Your pricing page has one job.